make real, the story so far

Our tldraw + GPT-4V experiment broke the internet a little.

Ever wanted to just sketch an interface, press a button, and get a working website? Well, now you can at makereal.tldraw.com.

And here’s the story.

Try it out

…but first, maybe you should try it yourself. Here’s how to do it:

- Get an OpenAI developer API key (you must have access to the GPT-4 API)
- Visit makereal.tldraw.com
- Paste your API key in the input at the bottom of the screen
- Draw your user interface
- Select your drawing
- Click the blue Make Real button

In a few seconds, your website will appear on the canvas. You can move it around and resize it. You can even draw on top of the website, then click Make Real again to generate a new result with your annotations.

How it started

At this year’s OpenAI dev day, the company announced the release of its new GPT-4 with Vision model (GPT-4V), a large language model capable of accepting images as input. It can do more than the usual hotdog-or-not image recognition: you can send it a photo of a chessboard and ask for the next best move; ask it why a New Yorker cartoon is funny; or get it to summarize a whiteboard (more on that soon).

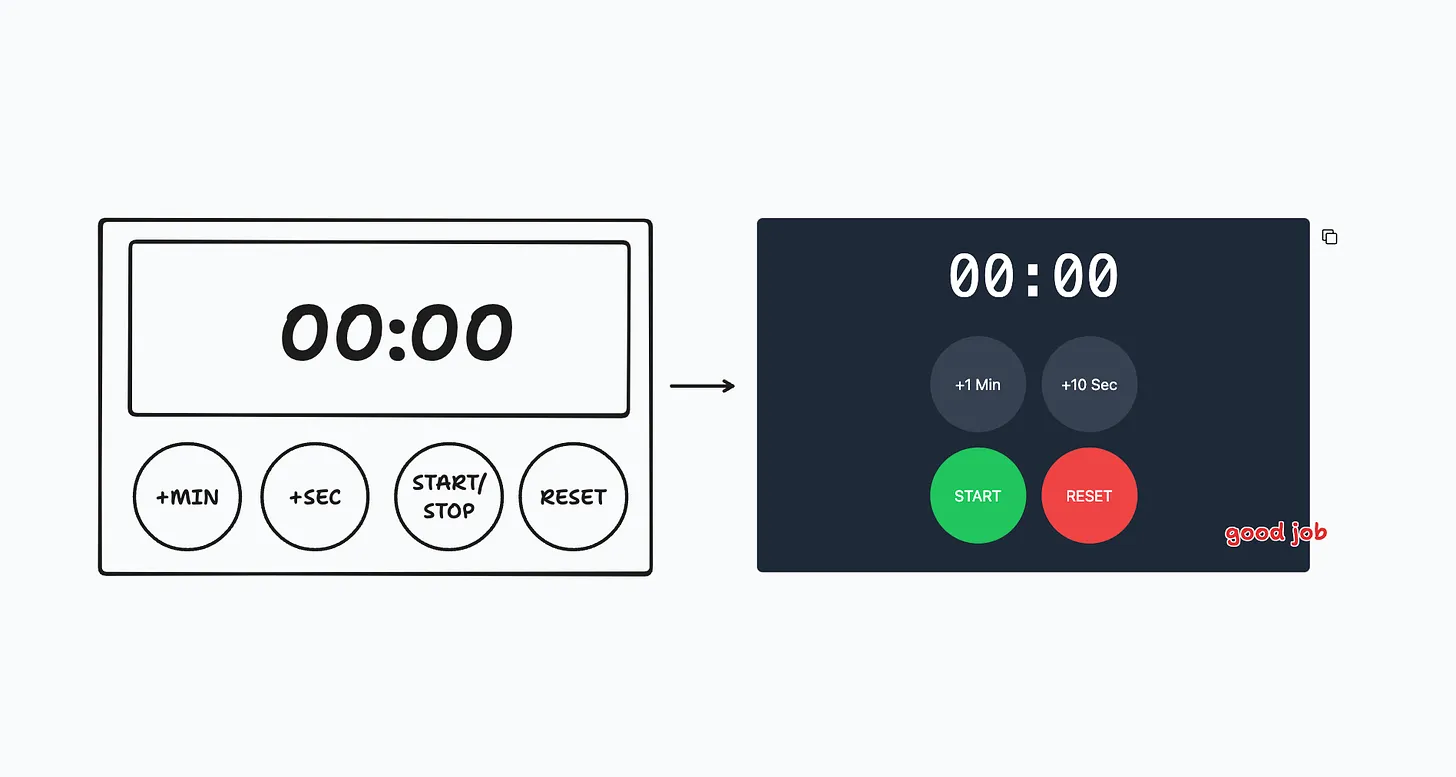

A day after the announcement, Figma engineer Sawyer Hood posted a video on X showing how he used the tldraw component to draw a user interface, export the drawing as a picture, send the picture to GPT-4V, and get back the HTML / CSS for a working website based on the drawing.
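If you’re curious what that round trip looks like in code, here is a minimal sketch of the middle two steps: packing an exported picture into a request for OpenAI’s Chat Completions endpoint, and pulling the HTML back out of the reply. It is not Sawyer’s code. The prompt wording, the `gpt-4-vision-preview` model name, and the helper names `buildVisionRequest` and `extractHtml` are all our assumptions for illustration.

```typescript
// One piece of a multi-part user message: either text or an image.
type ContentPart =
  | { type: "text"; text: string }
  | { type: "image_url"; image_url: { url: string } };

interface VisionRequest {
  model: string;
  max_tokens: number;
  messages: { role: "system" | "user"; content: string | ContentPart[] }[];
}

// Hypothetical prompt; the original project's wording is not reproduced here.
const SYSTEM_PROMPT =
  "You are a web developer. Reply with a single self-contained HTML file " +
  "that implements the wireframe in the image.";

// Build the JSON body for POST https://api.openai.com/v1/chat/completions.
// `imageDataUrl` is the exported drawing as a data URL (e.g. a base64 PNG).
function buildVisionRequest(imageDataUrl: string): VisionRequest {
  return {
    model: "gpt-4-vision-preview", // assumed model name
    max_tokens: 4096,
    messages: [
      { role: "system", content: SYSTEM_PROMPT },
      {
        role: "user",
        content: [
          { type: "text", text: "Turn this drawing into a working website." },
          { type: "image_url", image_url: { url: imageDataUrl } },
        ],
      },
    ],
  };
}

// Replies may wrap the file in prose, so keep only the HTML document.
// Assumes the reply spells the doctype exactly as "<!DOCTYPE html>".
function extractHtml(reply: string): string | null {
  const start = reply.indexOf("<!DOCTYPE html>");
  const end = reply.lastIndexOf("</html>");
  if (start === -1 || end === -1 || end < start) return null;
  return reply.slice(start, end + "</html>".length);
}
```

You would send the request body with `fetch` and an `Authorization: Bearer` header carrying your API key, then pass the reply text through `extractHtml` before rendering it.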

Coming on the heels of Vercel’s text-based user interface generator v0, this simple, clever, and easily hackable project was an instant hit. Writing two weeks later, the open source repository already has over 10,000 GitHub stars.

Meanwhile in London, at the tldraw office, we nearly fell over ourselves at the possibilities.

Back to the canvas

The next morning, we forked that repository and started making changes.

The first was obvious: put the website back on the canvas.

One of the web’s best tricks is the ability to embed one website in another website using the iframe element. In Sawyer’s demo, this iframe was in a popup. However, because tldraw’s canvas has always been made of regular HTML, it can support iframes directly.
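To make the iframe trick concrete, here is a small sketch that turns a generated HTML string into iframe markup using the standard `srcdoc` attribute. This is not how tldraw renders its shapes. The helper names `escapeAttribute` and `iframeMarkup` and the `sandbox` setting are our assumptions for illustration.

```typescript
// Escape the two characters that matter inside a double-quoted attribute:
// "&" (would start an entity) and '"' (would end the attribute value).
function escapeAttribute(value: string): string {
  return value.replace(/&/g, "&amp;").replace(/"/g, "&quot;");
}

// Build iframe markup that renders `html` inline through srcdoc,
// so the generated page needs no URL of its own.
function iframeMarkup(html: string, width: number, height: number): string {
  return (
    '<iframe srcdoc="' + escapeAttribute(html) + '"' +
    ' width="' + width + '"' +
    ' height="' + height + '"' +
    ' sandbox="allow-scripts"></iframe>' // assumed policy: scripts run, but in isolation
  );
}
```

Because the width and height are plain attributes, resizing the shape on the canvas only needs to change two numbers, which is what makes exploring responsive breakpoints so cheap.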

Suddenly we had a new loop: sketch a website and generate a working prototype, all without leaving the canvas. You could resize the website to explore its responsive breakpoints, arrange them together with other iterations, and enjoy all the thousand other benefits of canvas-based interactions.

Canvas conversations

On Wednesday, we took it further. Now that the website output was just another shape on the tldraw canvas, we could take it to the next step: marking up the website with notes and revisions, then sending it back as a new prompt.

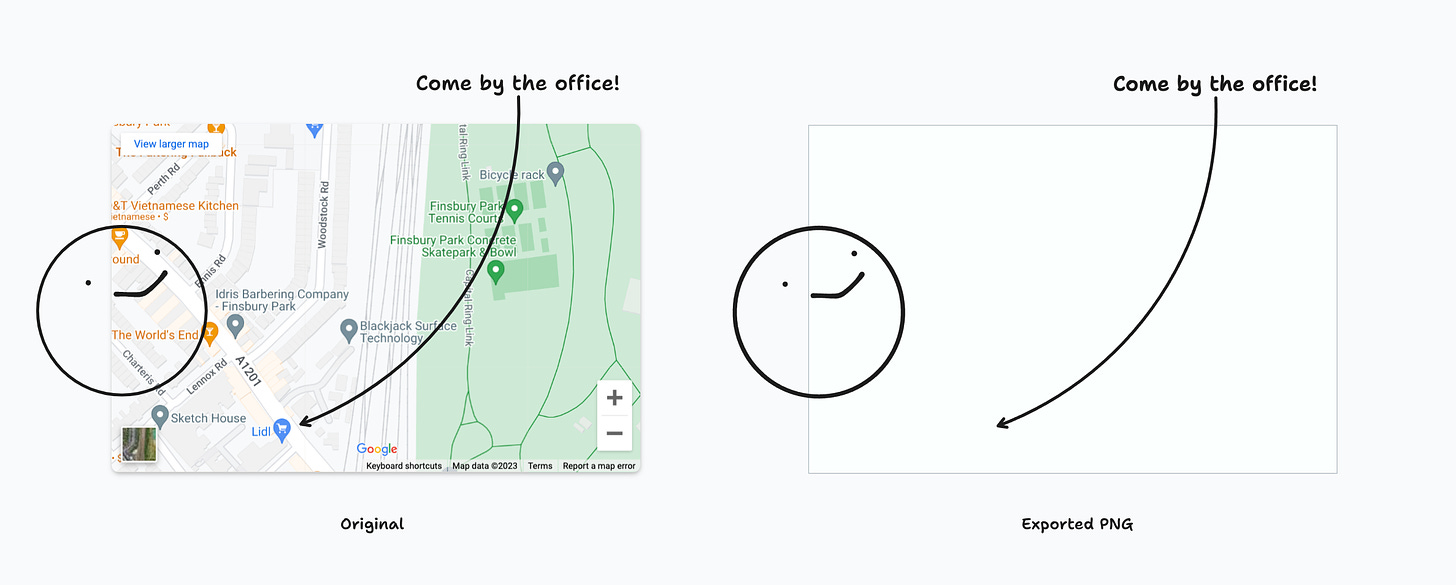

It’s incredible that this even works, because one limitation of the iframe element is that it’s essentially a black box to the website that contains it—there’s no way to see what’s inside the iframe. For this reason, embedded content shows up in our image exports only as empty rectangles.

So how do we get GPT-4V to “see” what’s in the box? We give it back the previous result’s HTML, together with a note to “fill in” the white box with this provided HTML.
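A rough sketch of that idea, assuming the same multi-part message format that OpenAI’s Chat Completions endpoint accepts: the screenshot goes in as an image, and the previous HTML rides along as an extra text part. The wording of the notes and the name `buildRevisionContent` are hypothetical, not the prompt we actually shipped.

```typescript
// One piece of a multi-part user message: either text or an image.
type Part =
  | { type: "text"; text: string }
  | { type: "image_url"; image_url: { url: string } };

// Build the user message for one round of revisions.
// `previousHtml` is null on the first round, when there is no earlier result.
function buildRevisionContent(
  screenshotDataUrl: string,
  previousHtml: string | null
): Part[] {
  const parts: Part[] = [
    { type: "image_url", image_url: { url: screenshotDataUrl } },
    {
      type: "text",
      text: "Here is the annotated design. Return a revised HTML file.",
    },
  ];
  if (previousHtml !== null) {
    // The iframe exports as an empty rectangle, so describe its contents in text.
    parts.push({
      type: "text",
      text:
        "The white box in the image is the previous result. " +
        "Fill it in with this HTML:\n" +
        previousHtml,
    });
  }
  return parts;
}
```

The returned array would become the `content` of a user message, so each new round carries both the latest markup and the latest annotations.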

And it works.

The result is an iterative back-and-forth shaping of a design via markup, notes, screenshots of other websites, and whatever else you can fit onto a canvas—which in tldraw, means anything. Like a chat window, the canvas is transformed into a conversation space where you and the AI can workshop an idea together. In this case, the result is working websites, which is amazing enough, but it could work just as well for anything.

Ship it ship it now ship ship

On Wednesday night we stayed late and shipped makereal.tldraw.com (as well as a separate deployment domain, drawmyui.com). Up to now, the only people who could use the project were developers able to download and run the code on their own machine with their own API keys. But we wanted to get everyone involved.

It was of course a chaos release: the service’s rate limit kicked in immediately, causing the app to break for everyone. In desperation, we threw together a text input where users could enter their own OpenAI developer API keys. This is usually a terrible idea and a security risk, but there was no other way. And at least we could point back to the source code to prove we weren’t harvesting keys.

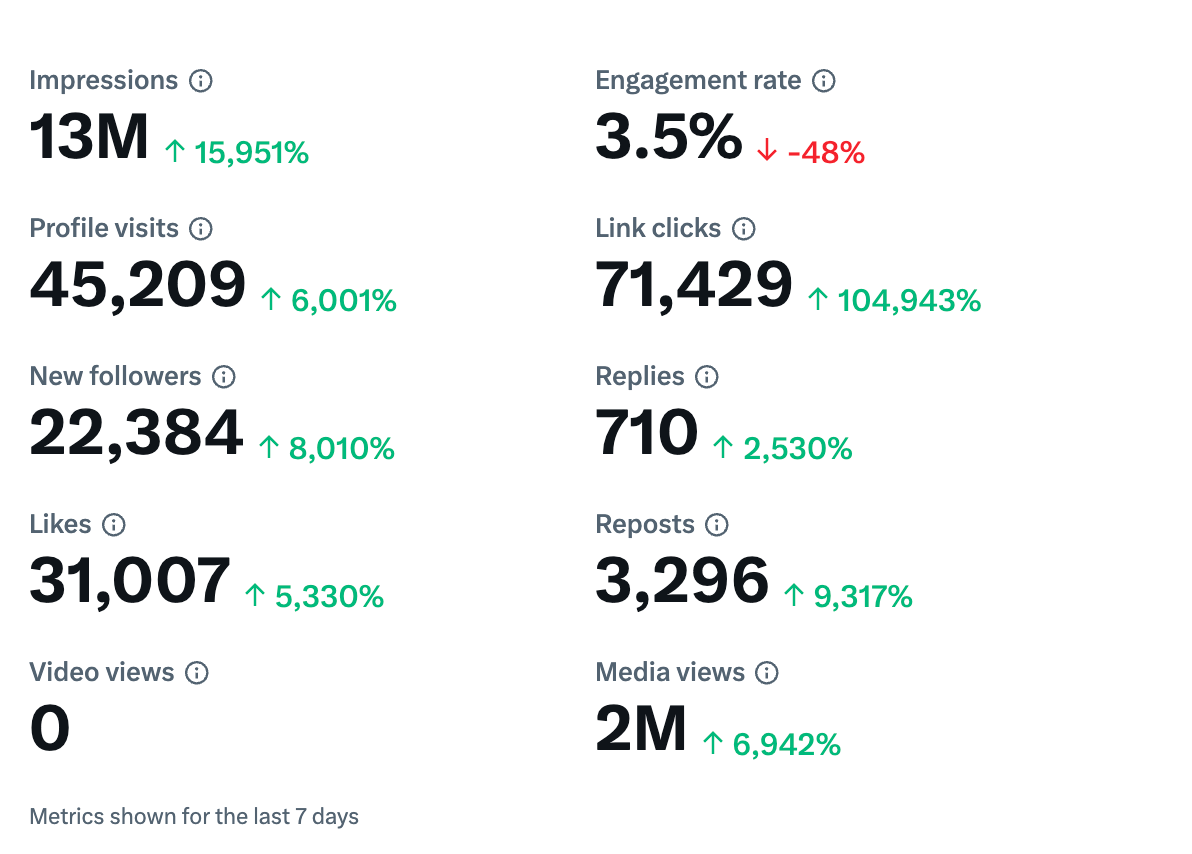

And the response was incredible.

Writing now, this release was roughly 72 hours ago. Since then, we’ve seen thousands of incredible posts, TikToks, and videos of people exploring this absolutely new possibility space. We couldn’t share it fast enough. We still can’t.

It’s been an absolute joy to see.

- Wes Bos on YouTube
- From https://twitter.com/muvich3n/status/1725175294598750651
- From https://twitter.com/hakimel/status/1725071370449469520
- From https://twitter.com/multikev/status/1724908185361011108
- From https://twitter.com/shuafeiwang/status/1725669747843330125

See many many many more examples on the tldraw feed.

Make your own

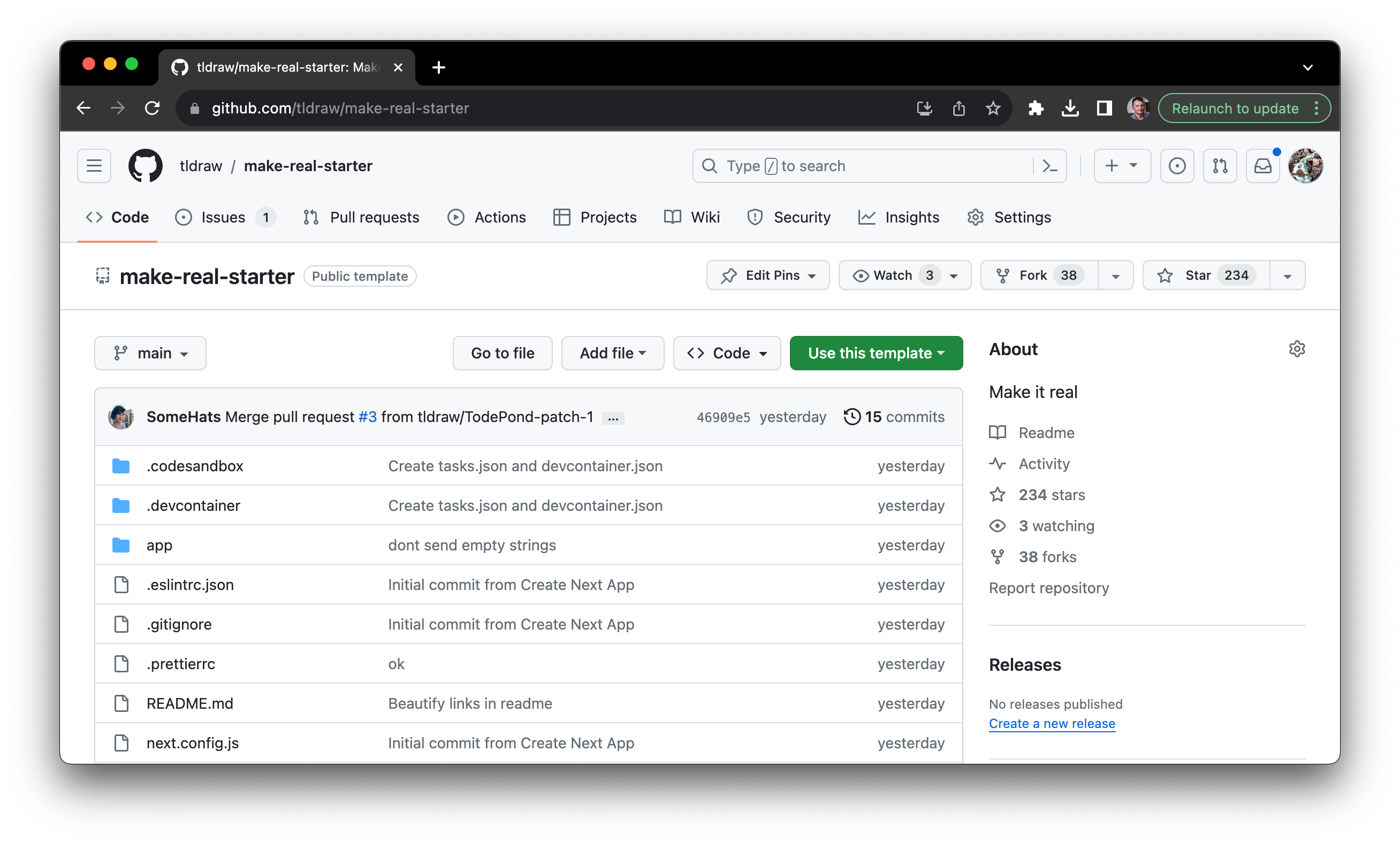

On Friday, we released a starter template that you can use to create your own experiments with tldraw and GPT-4V.

We’ve barely scratched the surface of what you can do with this technology. And we can’t possibly explore it all. Our hope is that you can take this starter and run with it, too.

You’ve got a canvas that can hold the whole internet and an AI that can see and think.

What will you make?

Visit the make real site for the GPT-4V prototype. Visit the regular tldraw site for a free collaborative whiteboard. Click here to draw something with randos.

To connect with the community, join our Discord or follow us on Twitter or Mastodon. If you’re a developer, visit the GitHub repo or the tldraw developer docs. Press inquiries to press@tldraw.com.